The Future of Health

Once again the NHS is front and centre of the upcoming general election in the UK. It is regularly pointed out that no government has ever cut the NHS budget. And yet NHS funding is always a key electoral issue, despite all of the major parties having essentially identical policies.

I think this is emblematic of a wider problem: our expectations of what healthcare can achieve grow every year, and yet every extra pound spent on health brings diminishing returns.

Chronic disease management is responsible for 80% of NHS expenditure. This, to me, was an astonishing fact to discover, because it flies in the face of what we expect from modern medicine. Perhaps these expectations have their roots in our childhood experiences of illness: we catch a chesty cough or break a bone, we see a doctor or go to hospital, a course of treatment is planned, and we recover to our prior state.

Talk to any doctor working in a developed nation and you will quickly discover that this is simply not what most of the modern healthcare professional's job looks like. A typical hospital patient is over the age of 60 and has a complex web of interacting diseases. It is truly a marvel that, thanks to modern medicine, such people can live full and comfortable lives for many years. Whether a health system whose structure is fundamentally unchanged from over half a century ago can realistically sustain this burden is one of the most pressing questions for any developed nation.

Like climate change, this is a problem that cannot be solved by political will alone. Technology must be a large part of the answer.

We’ve spent the last thirty years or so installing high-bandwidth communication channels just about everywhere, but in healthcare we’re only just beginning to take advantage of them beyond the most trivial use cases (such as having a doctor’s appointment over video chat and then having prescriptions posted to your door). Instead, we should be looking at every interaction between healthcare professionals and patients and asking these questions:

- Who needs to be in the room?

- What equipment needs to be in the physical proximity of the patient?

- How often should this take place?

- What would happen in an emergency?

In answering these questions, we can begin to see situations where much of the cost of these interactions can be eliminated. As the cost structure of care changes, care itself can be transformed from diagnosis followed by prescription into ongoing monitoring and treatment.

The most famous example of this paradigm is the treatment of diabetes. Diabetes has long been associated with sweet-tasting urine. From the 19th century onwards, this observation gave way to chemical tests for glucose, first in urine and later in blood, with new techniques steadily improving the specificity and sensitivity of the tests [1].

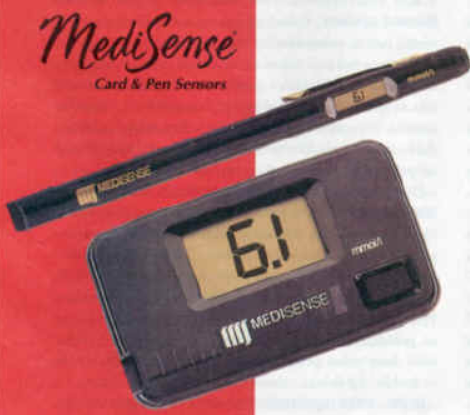

Early electrochemical point-of-care blood glucose monitoring kit

The falling cost of measuring blood glucose fundamentally transformed the quality of life of diabetes sufferers. Normal glucose levels can only be maintained by taking measurements several times a day, and technology has allowed these measurements to become ever more accurate, convenient and frequent [2].

Although modern diabetes management represents the culmination of some brilliant engineering in electrochemistry, molecular biology (to synthesise insulin) and product design, it is nonetheless the low-hanging fruit of chronic disease management. In managing diabetes we are essentially optimising a single parameter, blood glucose, using a medicine that is as close a replica as possible of insulin, a hormone produced naturally by healthy humans. Not only that, but glucose is one of the most abundant biomarkers in blood, typically present at a concentration of around 5 mmol/L. That is vastly higher than, say, testosterone, which circulates at nanomolar concentrations, more than 100,000 times less abundant, and is correspondingly much harder to detect. Harder still is sequencing individual strands of RNA.
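To put the abundance gap in numbers, here is a small back-of-the-envelope calculation. It is a sketch under stated assumptions, not clinical guidance: the 5 mmol/L glucose figure comes from the text, while the testosterone molar mass (about 288.42 g/mol) and an illustrative adult-male reference range of roughly 300 to 1,000 ng/dL are assumptions on my part, since laboratories quote slightly different ranges. The helper names are hypothetical.

```python
# Back-of-the-envelope sketch: how much more abundant is glucose than testosterone?
# ASSUMPTIONS: 5 mmol/L glucose (from the text); testosterone molar mass ~288.42 g/mol;
# illustrative adult-male range ~300-1,000 ng/dL (reference ranges vary by lab).

GLUCOSE_MMOL_PER_L = 5.0
TESTOSTERONE_MOLAR_MASS_G_PER_MOL = 288.42


def ng_per_dl_to_nmol_per_l(ng_per_dl: float, molar_mass_g_per_mol: float) -> float:
    """Convert a mass concentration (ng/dL) into a molar concentration (nmol/L)."""
    ng_per_l = ng_per_dl * 10.0  # 1 L = 10 dL
    # ng divided by g/mol gives nmol, so this is already in nmol/L
    return ng_per_l / molar_mass_g_per_mol


def glucose_to_analyte_ratio(analyte_nmol_per_l: float) -> float:
    """How many times more concentrated glucose is than the given analyte."""
    glucose_nmol_per_l = GLUCOSE_MMOL_PER_L * 1e6  # 1 mmol = 1,000,000 nmol
    return glucose_nmol_per_l / analyte_nmol_per_l


if __name__ == "__main__":
    for ng_per_dl in (300.0, 1000.0):  # low and high ends of the assumed range
        nmol = ng_per_dl_to_nmol_per_l(ng_per_dl, TESTOSTERONE_MOLAR_MASS_G_PER_MOL)
        ratio = glucose_to_analyte_ratio(nmol)
        print(f"testosterone {ng_per_dl:.0f} ng/dL = {nmol:.1f} nmol/L; "
              f"glucose is ~{ratio:,.0f} times more concentrated")
```

Under these assumptions the ratio lands in the low hundreds of thousands, i.e. around five orders of magnitude, which is why detecting hormones like testosterone demands far more sensitive chemistry than a glucose strip.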

Portable, low-cost gene sequencing devices will, I think, find their way into routine care, analysing small blood samples for particular RNA strands associated with certain diseases: for instance, monitoring viral load in HIV patients in real time. Oxford Nanopore is building just that, and I am excited for the day when these tiny, low-cost sequencers are so abundant that we can routinely run massive studies (N > 10,000) and see what kinds of correlations jump out.

But why not do the same with ultrasound?

Whilst the power of blood tests for diagnosis and disease monitoring is firmly lodged in the public consciousness, the idea of using ultrasound in the same way is not. I don’t see why it shouldn’t be: we already use ultrasound routinely for diagnosis within hospitals, so why not take the machines out of the hospitals, as we did with portable blood testing? Why not see what new clinical care pathways, or possibilities in chronic disease management, can be opened up by the (already underway) commoditisation of ultrasound hardware?

I think there are a few reasons why this hasn’t happened yet:

- Ultrasound is highly operator dependent. It’s not simply a matter of interpreting the image but actually lining up the probe correctly and knowing what you’re searching for

- Ultrasound is not “data” in the same way as a full blood count or sequenced gene is, so it’s unclear how to use an ultrasound scan as part of a closed feedback loop for disease management

- There is no big database of ultrasound data as there is for MRI, so it’s harder to train machine learning models to detect features

I believe that all of these problems have recently become solvable, and that solving them could bring enormous benefits to humanity. Operator dependence can be addressed thanks to the ubiquitous high-bandwidth connectivity mentioned above (4G/5G, WiFi, etc.), which lets a remote expert guide whoever is holding the probe in real time. The question of how to turn a scan into usable data can be addressed by AI and machine learning. And the lack of a large dataset can be addressed by low-cost handheld ultrasound machines, which can be deployed en masse to collect data from thousands of patients affordably.

Although the future of healthcare holds some daunting challenges, the technological ecosystem today is very different from what it was seventy years ago, when the NHS was founded. Stating this seems almost tautological, and yet I’m convinced that the way we look at healthcare today, as something that revolves around how many hospital beds we as a society can afford, will seem terribly dated in the near future. This space is full of opportunities, and I can’t wait to see what people come up with.

References

[1] N. Moodley, U. Ngxamngxa, M. J. Turzyniecka, and T. S. Pillay, ‘Historical perspectives in clinical pathology: a history of glucose measurement’, Journal of Clinical Pathology, vol. 68, no. 4, pp. 258–264, Apr. 2015.

[2] S. F. Clarke and J. R. Foster, ‘A history of blood glucose meters and their role in self-monitoring of diabetes mellitus’, British Journal of Biomedical Science, vol. 69, no. 2, pp. 83–93, Jan. 2012.